Imitating the Canvas Engine (5): Basic Edge Detection

Previous Post:

Imitating the Canvas Engine (5): Basic Edge Detection - Memories of Melon Pan

The edge detection algorithm I used is pretty basic. There are any number of ways to find edges, some more complex than others, but for simplicity (and just to get them on the screen), I went for a diagonal gradient on vertex normals and depth values. It's the same approach the XNA Nonphotorealistic Rendering tutorial uses. I went this way because the only other method I know is simple Laplacian edge detection with a 3x3 kernel... which isn't that complex either.

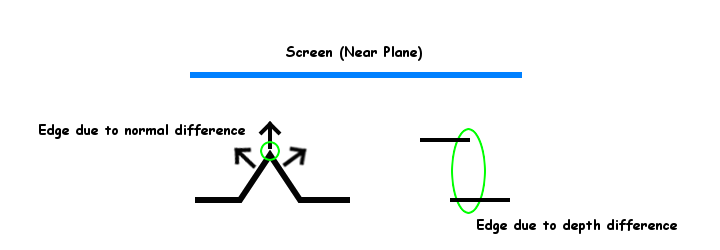

For this edge detection algorithm, we're using vertex normals and depth. The theory is that if the normals at neighboring pixels differ by enough (i.e. one points left and its neighbor points right), then there is an edge in the scene at that point. Likewise, a big difference in depth means the surfaces are likely not connected, so there is another edge in the scene at that point.

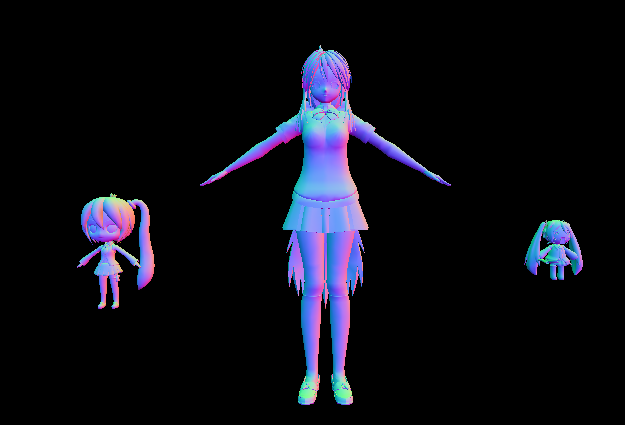

Once again, we'll be using a normal depth map to help us out. Making one is pretty easy, and I talked about it in another post.

Imitating the Canvas Engine (2): Normal Maps and Depth Blur - Memories of Melon Pan

If you don't want to go back to that article though, I'll just repost what that normal depth map looks like when rendered as color.

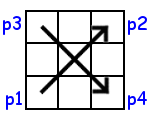

To determine if a pixel contains an edge, we can look at the normals and depths of its surrounding pixels and see how much they change as we go across them. This is the diagonal gradient mentioned earlier. Take a 3x3 grid of pixels centered on the one we're rendering, and look at the pixels diagonal to it.

There are two gradients here: the difference between p1 and p2, which sit opposite each other on one diagonal, and the difference between p3 and p4, which sit on the other. All four are four-dimensional vectors, containing the three-dimensional normal vector at that pixel plus its depth value as the fourth component.
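To make the sampling concrete, here's a rough CPU-side sketch in Python (not the shader itself). It assumes the normal depth map is a list of rows of (nx, ny, nz, depth) tuples, and that p1/p2 are the top-left/bottom-right corners while p3/p4 are the bottom-left/top-right ones. That corner assignment and the function name `diagonal_samples` are my own picks for illustration; swapping the diagonals wouldn't change the result. Out-of-range coordinates are clamped, similar to a clamping texture sampler.

```python
def diagonal_samples(normal_depth, x, y):
    """Return (p1, p2, p3, p4): the four corners of the 3x3 block around (x, y).

    normal_depth is a list of rows; each entry is an (nx, ny, nz, depth) tuple.
    The corner-to-name mapping is an assumption made for this sketch.
    """
    height = len(normal_depth)
    width = len(normal_depth[0])

    def sample(sx, sy):
        # Clamp to the map bounds so border pixels still get four samples.
        sx = min(max(sx, 0), width - 1)
        sy = min(max(sy, 0), height - 1)
        return normal_depth[sy][sx]

    p1 = sample(x - 1, y - 1)  # top-left
    p2 = sample(x + 1, y + 1)  # bottom-right
    p3 = sample(x - 1, y + 1)  # bottom-left
    p4 = sample(x + 1, y - 1)  # top-right
    return p1, p2, p3, p4


# Tiny hypothetical map where each texel stores its own (x, y) for easy checking.
grid = [[(float(cx), float(cy), 0.0, 1.0) for cx in range(3)] for cy in range(3)]
center = diagonal_samples(grid, 1, 1)
corner = diagonal_samples(grid, 0, 0)
```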

The difference between these diagonal points, p1 - p2 and p3 - p4, is the amount of change in normal and depth. We're going to do the simple thing and add the two together, and call it our raw difference, Di.

Di = abs(p1 - p2) + abs(p3 - p4)

The absolute values are there because we only care about how different the two points are, so the result shouldn't change if we compute p2 - p1 instead. Just keep in mind that Di is a four-dimensional vector, while pretty much everything else we deal with is going to be a scalar value.
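As a quick illustration of that formula, here's a small Python sketch (again on the CPU, not the actual shader). The sample values are made up, and `raw_difference` is just a name I picked. The absolute value is applied per component, and swapping the operands gives the same answer.

```python
def raw_difference(p1, p2, p3, p4):
    """Component-wise abs(p1 - p2) + abs(p3 - p4) for (nx, ny, nz, depth) tuples."""
    return tuple(abs(a - b) + abs(c - d) for a, b, c, d in zip(p1, p2, p3, p4))


# Made-up samples: the normal flips across one diagonal, the depth jumps across the other.
p1 = (0.0, 0.0, 1.0, 0.25)
p2 = (1.0, 0.0, 0.0, 0.25)
p3 = (0.0, 0.0, 1.0, 0.25)
p4 = (0.0, 0.0, 1.0, 0.75)

di = raw_difference(p1, p2, p3, p4)          # (1.0, 0.0, 1.0, 0.5)
di_swapped = raw_difference(p2, p1, p4, p3)  # same result, thanks to abs()
```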

At this point, we're down to the two things we're detecting edges on.

- Total difference in normal, dn

- Total difference in depth, dd

And once we have those two, we can calculate a difference score D. The definition of these can be pretty simple...

dn = Di.x + Di.y + Di.z

dd = Di.w

D = dn + dd

If D is greater than some threshold x, then the pixel is on an edge.
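In Python, that simple version might look something like the sketch below. The threshold of 0.5 is a placeholder I chose for the example, not a recommended value, and the function names are hypothetical.

```python
def simple_score(di):
    """D = dn + dd, where di is the raw difference (x, y, z, w)."""
    dn = di[0] + di[1] + di[2]  # total difference in normal
    dd = di[3]                  # total difference in depth
    return dn + dd


def is_edge(di, threshold):
    """A pixel is on an edge if its score exceeds the threshold."""
    return simple_score(di) > threshold


THRESHOLD = 0.5  # placeholder value for illustration only

flat = (0.0, 0.0, 0.0, 0.0)    # identical neighbors: no change at all
crease = (1.0, 0.0, 1.0, 0.5)  # normals and depth both change

flat_is_edge = is_edge(flat, THRESHOLD)
crease_is_edge = is_edge(crease, THRESHOLD)
```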

... but we can do a little better. First off, changes in depth count a lot more towards an edge than changes in normal, so we can add a multiplier to each of dn and dd. Second, we can add a filter which ignores certain small values for each of dn and dd, so we don't get a partial edge match for some really tiny difference.

The filter can be done by simply subtracting some small value from each of them, then clamping the result to a minimum of 0.0 so that negative values don't leak into the later calculations. HLSL comes with a function that does this for us, saturate, and it caps the maximum value at 1.0 as well.

Which means we end up with this.

Di = abs(p1 - p2) + abs(p3 - p4)

dn = saturate(((Di.x + Di.y + Di.z) - Tn) * Sn)

dd = saturate((Di.w - Td) * Sd)

D = saturate(dn + dd)

For the variables:

- Di, Raw difference in diagonals, a four dimensional vector

- dn, Total difference in normal

- Tn, Minimum threshold value in normal

- Sn, Sensitivity in normal

- dd, Total difference in depth

- Td, Minimum threshold value in depth

- Sd, Sensitivity in depth

- D, Difference score

Where saturate(x) equals:

- 0.0, if x < 0.0

- 1.0, if x > 1.0

- x, otherwise

And we're left with a difference score for a pixel that's guaranteed to be between 0.0 and 1.0 (inclusive).
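Putting the whole thing together, here's a minimal Python sketch of the formulas above. It's a CPU-side illustration rather than the HLSL I actually run, and the threshold and sensitivity values are placeholders picked so the arithmetic is easy to follow. The name `edge_score` is also just for this example.

```python
def saturate(x):
    """Clamp x to the range [0.0, 1.0], like HLSL's saturate."""
    return min(max(x, 0.0), 1.0)


def edge_score(p1, p2, p3, p4, tn, sn, td, sd):
    """Difference score D in [0.0, 1.0] from four (nx, ny, nz, depth) samples.

    tn/td are the minimum thresholds, sn/sd the sensitivities.
    """
    # Raw difference across both diagonals, per component.
    di = tuple(abs(a - b) + abs(c - d) for a, b, c, d in zip(p1, p2, p3, p4))
    dn = saturate((di[0] + di[1] + di[2] - tn) * sn)
    dd = saturate((di[3] - td) * sd)
    return saturate(dn + dd)


# Placeholder parameters for illustration only.
params = dict(tn=0.5, sn=1.0, td=0.1, sd=10.0)

same = (0.0, 0.0, 1.0, 0.5)
tilted = (0.5, 0.0, 0.5, 0.5)  # different normal, same depth
far = (0.0, 0.0, 1.0, 1.0)     # same normal, much deeper

flat_score = edge_score(same, same, same, same, **params)    # nothing changes
crease_score = edge_score(same, tilted, same, same, **params)  # partial match
depth_score = edge_score(same, far, same, same, **params)    # big depth jump
```

With these numbers the flat case scores 0.0, the normal-only case lands partway at 0.5, and the depth jump saturates to 1.0, which shows how the depth sensitivity makes depth changes count for more.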

I haven't gotten into edge rendering, but this is the detection part in a nutshell. While it takes a lot of words to describe all the theory behind it, it's not very hard to code. Declarations aside, it can be done in around 20 or 25 lines in HLSL.

Next Post:

Imitating the Canvas Engine (6): Edge Rendering - Memories of Melon Pan