Imitating the Canvas Engine (7): Basic Shadow Mapping

Previous Post:

Imitating the Canvas Engine (6): Edge Rendering - Memories of Melon Pan

Okay... now for the hard part.

If you look at the shadows in Valkyria Chronicles, they all have a penciled-in look. By all appearances, shadows aren't drawn by simply dimming the pixel color. Instead, the scene is drawn normally, and then a pencil scratch texture is blended into it, producing the characteristic dark pencil streaks you see wherever there's shadow. To know where to blend that texture in, we first need to know where the shadows are, and that means shadow mapping.

There are a bunch of shadow mapping tutorials online, but I couldn't find one that matched exactly what I needed. Most of them dealt with point lights placed in the scene itself, whereas I was using directional lights. Granted, even a directional light has to be assigned a position in space before you can do any calculations with it (almost as if it were a point light), but none of these tutorials showed a good way to choose that position. Even the ones that claimed to use a directional light actually used a fixed point light located outside the camera's viewing frustum. What happens if you zoom out?

Maybe I'm overthinking this. Maybe in real life, people normally just use point lights that are far away from the scene and expect players never to rotate the camera there. But well... I don't know that, and I'm curious to figure out the math. So, even with the basic algorithm under my belt, I was gonna have to get creative.

The concept of shadow mapping is pretty easy to explain. Imagine rendering the scene from the point of view of the light instead of the camera. Everything you can see from there is in light. If some object (or part of an object) is hidden when rendering this way, it cannot be receiving light... or at least, not from this light source. The only catch is that this isn't the view from the camera, which is the one we actually draw to the screen.

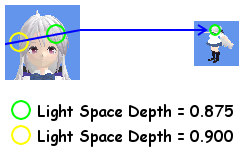
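To make the "render from the light" idea concrete, here is a tiny CPU sketch in Python. It is not a real rasterizer: it assumes the scene has already been reduced to sample points given in light space as `(u, v, depth)`, with `u` and `v` in [0, 1] selecting a texel and a smaller depth meaning closer to the light. The function name `render_light_depth` and the toy scene are hypothetical. Like a depth buffer, each texel keeps only the nearest depth it sees.

```python
import math


def render_light_depth(points, size):
    """Toy stand-in for rendering the scene from the light's point of view.

    Each point is (u, v, depth) already expressed in light space (an assumption):
    u, v in [0, 1] pick a texel, and a smaller depth means closer to the light.
    Like a depth buffer, each texel keeps only the nearest depth it has seen.
    """
    depth_map = [[math.inf] * size for _ in range(size)]
    for u, v, d in points:
        col = min(int(u * size), size - 1)  # clamp u == 1.0 into the last texel
        row = min(int(v * size), size - 1)
        depth_map[row][col] = min(depth_map[row][col], d)
    return depth_map


# Hypothetical scene: two surfaces under the same texel, plus one off to the side.
scene = [
    (0.1, 0.1, 0.2),  # roof: closest to the light in its texel
    (0.1, 0.1, 0.7),  # floor under the roof: hidden from the light
    (0.9, 0.9, 0.5),  # open ground: nothing in front of it
]
depth_map = render_light_depth(scene, size=4)
print(depth_map[0][0], depth_map[3][3])  # 0.2 0.5
```

The floor at depth 0.7 never makes it into the map: its texel only remembers the roof at 0.2. That is exactly the "hidden from the light, so not lit by it" case.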

So now we have to think from two separate viewpoints: one from the light, and one from the rendered screen. What if we could figure out which pixel in light space corresponds to a given pixel in screen space? What if we could figure out what depth that screen space pixel would have if it were in light space? And what if we already knew what depth the corresponding light space pixel ended up with?

As it turns out, we can answer the first two with a bit of matrix multiplication, and we already know the last, since we rendered the scene from the light. As for the final question: what good does all of that do us? Think about the depth of that screen space pixel once it's put into light space. If it's greater than the depth already stored in the corresponding light space pixel, then something must lie between that pixel and the light. That means it's in shadow.
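Written out, that "bit of matrix multiplication" could look like the following. This is a sketch under conventions the post doesn't state: OpenGL-style normalized device coordinates (NDC) in [-1, 1], a shadow map storing depths in [0, 1], $V_c$ and $P_c$ as the camera's view and projection matrices, and $V_l$ and $P_l$ as the light's (all assumed names). A screen pixel with NDC coordinates $(x_s, y_s, z_s)$ is first taken back to world space, then pushed through the light's matrices:

$$
\mathbf{p} = (P_c V_c)^{-1} \begin{pmatrix} x_s \\ y_s \\ z_s \\ 1 \end{pmatrix},
\qquad
\mathbf{p}_{\text{world}} = \frac{\mathbf{p}}{p_w}
$$

$$
\mathbf{q} = P_l V_l \, \mathbf{p}_{\text{world}},
\qquad
u = \tfrac{1}{2}\frac{q_x}{q_w} + \tfrac{1}{2},
\qquad
v = \tfrac{1}{2}\frac{q_y}{q_w} + \tfrac{1}{2},
\qquad
d = \tfrac{1}{2}\frac{q_z}{q_w} + \tfrac{1}{2}
$$

Here $(u, v)$ picks the shadow map texel (the first question), $d$ is the pixel's depth as seen from the light (the second), and with $D(u, v)$ as the depth stored in the shadow map (the third), the test is:

$$
d > D(u, v) \;\Longrightarrow\; \text{the pixel is in shadow}
$$

If the world position is already available, for example passed along from the vertex shader, the first line can be skipped.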

Putting all of this into fine little bullet points:

- Render the scene from the light's point of view, outputting depth and not color.

- Render the scene normally from the point of view of the camera.

- For each screen pixel, find which pixel it would correspond to in the shadow map.

- Find what depth the screen pixel would have been at if it were in the shadow map.

- If the screen pixel's depth is greater than the shadow map's depth, then the screen pixel is in shadow.
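Steps 3 to 5 for a single pixel might look like the Python sketch below, a CPU stand-in for what would normally run per pixel in a shader. Several things here are assumptions rather than anything from this post: OpenGL-style matrices with NDC in [-1, 1], an orthographic projection for the light (a common pairing with directional lights), a light view transform equal to the identity (the light sits at the origin looking down -z, which sidesteps the placement question raised earlier), a shadow map cleared to 1.0 (the far plane), and a small `bias`, a common guard against "shadow acne" caused by depth precision. The names `ortho`, `mat_vec`, and `in_shadow` are hypothetical, and the pixel's world position is taken as given.

```python
def ortho(left, right, bottom, top, near, far):
    """OpenGL-style orthographic projection as row-major nested lists.

    Assumes the light looks down -z, so z = -near maps to NDC -1 and z = -far to +1.
    """
    return [
        [2 / (right - left), 0, 0, -(right + left) / (right - left)],
        [0, 2 / (top - bottom), 0, -(top + bottom) / (top - bottom)],
        [0, 0, -2 / (far - near), -(far + near) / (far - near)],
        [0, 0, 0, 1],
    ]


def mat_vec(m, v):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def in_shadow(world_pos, light_matrix, shadow_map, bias=1e-3):
    """Steps 3-5: find the shadow map texel and light-space depth, then compare."""
    x, y, z = world_pos
    cx, cy, cz, cw = mat_vec(light_matrix, [x, y, z, 1.0])
    # Perspective divide (w stays 1 for an orthographic light, but keep it general).
    nx, ny, nz = cx / cw, cy / cw, cz / cw
    # Map NDC [-1, 1] to texture coordinates and depth in [0, 1].
    u, v, depth = nx * 0.5 + 0.5, ny * 0.5 + 0.5, nz * 0.5 + 0.5
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        return False  # outside the shadow map: treat as lit
    size = len(shadow_map)  # assumes a square map
    col = min(int(u * size), size - 1)
    row = min(int(v * size), size - 1)
    # Farther from the light than what the light saw means something is in between.
    return depth > shadow_map[row][col] + bias


light = ortho(-10, 10, -10, 10, 1, 21)
shadow_map = [[1.0] * 4 for _ in range(4)]  # cleared to the far plane
shadow_map[1][1] = 0.25                     # hypothetical roof the light saw at depth 0.25

print(in_shadow((-2.5, -2.5, -6.0), light, shadow_map))   # roof itself: False
print(in_shadow((-2.5, -2.5, -16.0), light, shadow_map))  # floor under roof: True
print(in_shadow((2.5, 2.5, -16.0), light, shadow_map))    # open ground: False
```

In an actual renderer, the same comparison would typically happen in the fragment shader, sampling the shadow map texture instead of indexing a list.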

Next Post:

Imitating the Canvas Engine (8): Constructing the Shadow Map - Memories of Melon Pan